
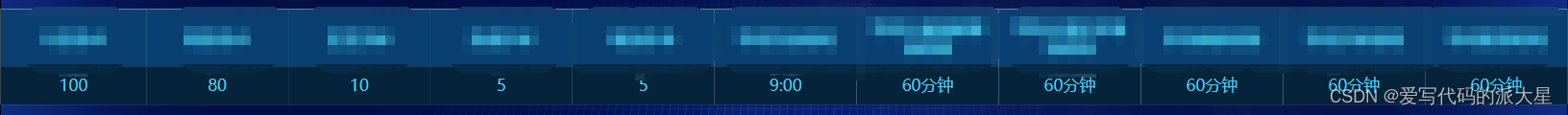

2023.11.21: Today I learned how to customize the styles of an el-table, as shown in the screenshot above.

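The two attributes I mainly used are:

1. `header-cell-class-name` on `el-table` (sets the class of the header cells)

2. `class-name` on `el-table-column` (sets the class of the cells in that column)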
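Besides a plain string, Element UI's `header-cell-class-name` also accepts a function that receives `{ row, column, rowIndex, columnIndex }` and returns a class name. Here is a minimal sketch of that form; the function name `headerCellClass`, the extra class `first_header_style`, and the "first column" rule are all hypothetical and only for illustration:

```javascript
// Hypothetical helper: returns the class name(s) for one header cell.
// Element UI calls it with { row, column, rowIndex, columnIndex }.
function headerCellClass({ columnIndex }) {
  // Assumed rule for illustration: give the first column an extra class.
  return columnIndex === 0
    ? 'header_name_style first_header_style'
    : 'header_name_style';
}
```

To use it, it would be placed in the component's `methods` and bound as `:header-cell-class-name="headerCellClass"`. If every header cell should look the same, the string form is simpler.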

The code is as follows:

```html
<el-table
  :data="typeList"
  class="real_operation_table"
  :header-cell-class-name="'header_name_style'"
>
  <el-table-column
    :class-name="'all_cell_style'"
    align="center"
    v-for="(value, key) in typeList[0]"
    :key="key"
    :prop="key"
  >
    <template slot="header" slot-scope="scope">
      <span>{{ value.name }}</span>
    </template>
    <template slot-scope="scope">
      <span>{{ scope.row[key].value }}</span>
    </template>
  </el-table-column>
</el-table>
```

If your el-table has its own class, remember to replace `real_operation_table` in the styles with your own class name; if it has none, just target `.el-table` directly.

```scss
// Header cell background and text color
::v-deep .header_name_style {
  background-color: rgb(4, 62, 114) !important;
  color: #4cd0ee;
  font-size: 20px;
}

// Body cell background color
::v-deep .all_cell_style {
  background-color: rgb(5, 35, 61);
  color: #4cd0ee;
  font-size: 20px;
}

// Remove the border at the bottom of the table
.real_operation_table::before {
  width: 0;
}

// Remove cell borders
::v-deep .real_operation_table .el-table__cell {
  border: none !important;
}

::v-deep .el-table--scrollable-y .el-table__body-wrapper {
  background-color: rgb(5, 35, 61);
}

::v-deep .real_operation_table .el-table__cell.gutter {
  background-color: rgb(6, 71, 128) !important;
}

// Hover effect
::v-deep .real_operation_table {
  // Style of each row when the mouse is over it
  .el-table__body tr:hover > td {
    background-color: rgb(5, 35, 61) !important;
  }

  .el-table__body tr.current-row > td {
    background-color: rgb(5, 35, 61) !important;
  }
}

// Scrollbar styles
::v-deep ::-webkit-scrollbar {
  width: 0.4vh;
}

::v-deep ::-webkit-scrollbar-track {
  background-color: transparent;
}

::v-deep ::-webkit-scrollbar-thumb {
  background-color: rgb(68, 148, 220);
  border-radius: 4px;
}

// Remove the table's background color
::v-deep .el-table {
  background-color: transparent !important;
}
```
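For reference, the template iterates over `typeList[0]` and reads `value.name` and `scope.row[key].value`, so it seems to expect each row to be an object whose values look like `{ name, value }`. Here is a rough sketch of data in that shape; the keys `date` and `count` and all the values are made up for illustration:

```javascript
// Assumed shape of typeList, inferred from the template: each row is an
// object whose keys are column keys and whose values hold { name, value }.
// The keys `date` and `count` and all values here are hypothetical.
const typeList = [
  { date: { name: 'Date', value: '2023-11-20' }, count: { name: 'Count', value: 12 } },
  { date: { name: 'Date', value: '2023-11-21' }, count: { name: 'Count', value: 15 } },
];

// Mirrors v-for="(value, key) in typeList[0]": one column per key of the first row.
const columns = Object.entries(typeList[0]).map(([key, value]) => ({
  prop: key,
  label: value.name,
}));

// Mirrors {{ scope.row[key].value }} for a given row and column key.
function cellText(row, key) {
  return row[key].value;
}
```

Because the columns are derived from the first row only, every row needs the same keys, and `typeList` must not be empty when the table renders.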